Introduction:

Which is the Right Platform for Your Data Needs?

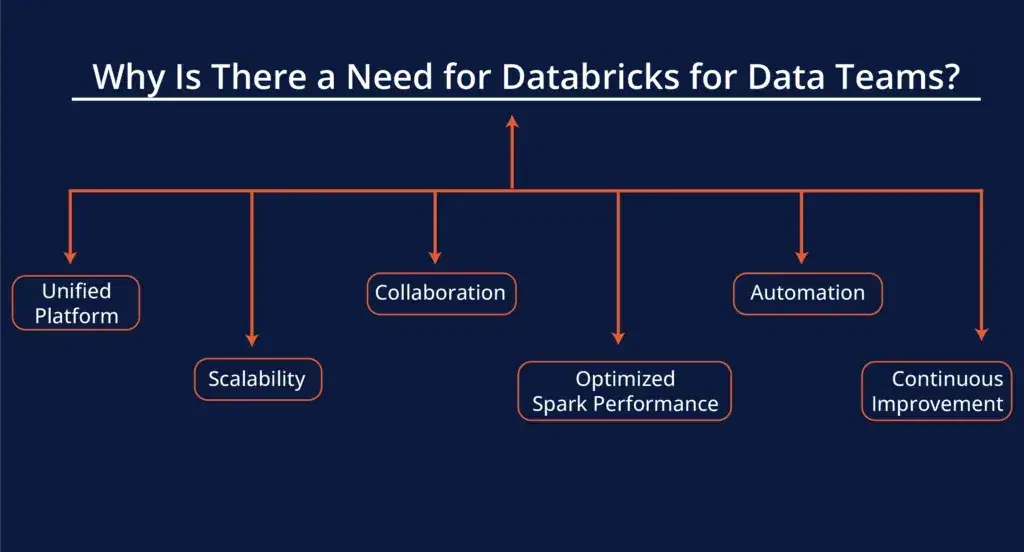

Why Do Data Teams Need Databricks?

- Unified Platform: Databricks integrates data engineering, machine learning, and analytics on one platform, making collaboration easier.

- Scalability: It processes large datasets efficiently and can scale compute resources up or down automatically to match the workload.

- Collaboration: Teams can work together in real time through shared notebooks and workflows.

- Optimized Spark Performance: Databricks is built on Apache Spark, and its runtime adds performance optimizations on top of open-source Spark.

- Automation: It supports automated workflows, reducing manual tasks and improving productivity.

- Continuous Improvement: Automation, automatic resource scaling, and related features make it easier to keep improving your data pipelines over time.

8 Best Insider Tricks to Save Time and Optimize Databricks

1. Organize Your Workflows with Databricks Notebooks

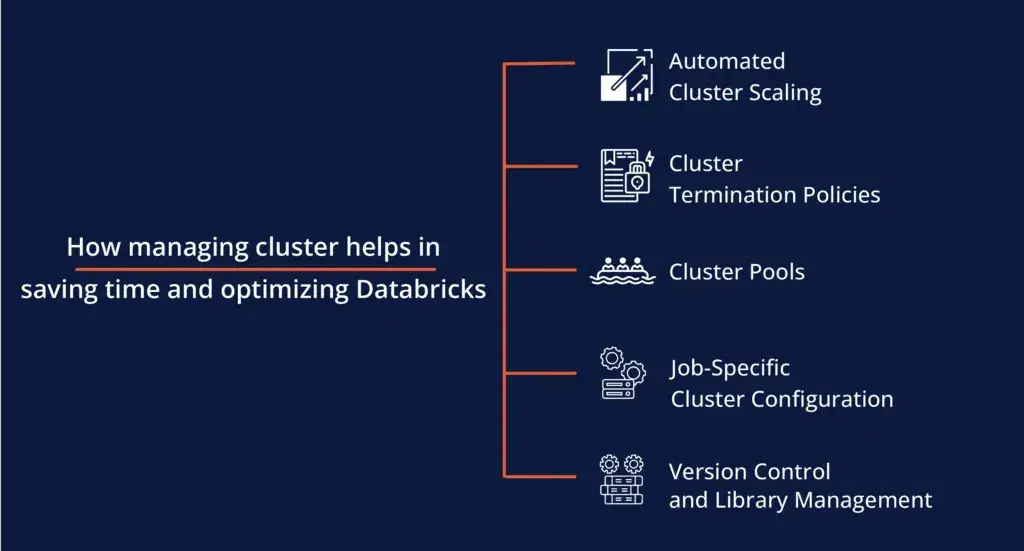

2. Configure Databricks Clusters for Efficient Processing

- Use Auto-Scaling Clusters: Instead of fixing the number of worker nodes manually, enable auto-scaling. Databricks then adjusts the cluster size within the minimum and maximum you set, based on the workload, which saves both time and money.

- Terminate Idle Clusters: Clusters that keep running while no one is using them still incur costs. Enable automatic termination so idle clusters shut down after a period of inactivity, which keeps expenses in check.
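Both settings can also be applied when clusters are created programmatically. The sketch below assumes you use the Databricks Clusters API, whose payload includes an `autoscale` object (`min_workers`, `max_workers`) and an `autotermination_minutes` field. The helper `build_cluster_spec`, the cluster name, and the placeholder runtime and node-type values are hypothetical and only illustrate the shape of the request.

```python
import json


def build_cluster_spec(
    name: str,
    min_workers: int,
    max_workers: int,
    idle_minutes: int,
    spark_version: str = "<runtime-version>",  # placeholder: choose a real Databricks Runtime version
    node_type_id: str = "<node-type>",  # placeholder: choose a node type your cloud offers
) -> dict:
    """Hypothetical helper: build a cluster payload with auto-scaling and auto-termination."""
    # Basic sanity check on the worker range.
    if not 0 <= min_workers <= max_workers:
        raise ValueError("need 0 <= min_workers <= max_workers")
    # The Clusters API documents a 10 to 10000 minute range (0 disables it);
    # this helper always wants auto-termination on, so 0 is rejected here.
    if not 10 <= idle_minutes <= 10000:
        raise ValueError("idle_minutes must be between 10 and 10000")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Databricks adds or removes workers within this range based on load.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Shut the cluster down after this many minutes of inactivity.
        "autotermination_minutes": idle_minutes,
    }


if __name__ == "__main__":
    spec = build_cluster_spec("etl-cluster", min_workers=2, max_workers=8, idle_minutes=30)
    print(json.dumps(spec, indent=2))
```

The resulting JSON could be sent to the `POST /api/2.0/clusters/create` endpoint; check the current API reference for any other fields your workspace requires.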

3. Take Advantage of Databricks Workflows

- Use Multi-Task Workflows: You can define workflows made of multiple dependent tasks. For example, start a machine learning task only after your data cleaning pipeline completes successfully.

- Alerting: Set up alerts for failed tasks so you can act as soon as something goes wrong, instead of discovering the failure later through delayed or incorrect results.
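A job with one dependency and a failure alert might be described as follows. This is a sketch assuming the Databricks Jobs API 2.1 payload shape (`tasks`, `task_key`, `depends_on`, `notebook_task`, `email_notifications.on_failure`). The job name, notebook paths, email address, and the `run_order` helper are hypothetical, and the compute settings each task needs are omitted for brevity.

```python
import json
from graphlib import TopologicalSorter


def build_job_spec(alert_email: str) -> dict:
    """Hypothetical job: clean the data first, then train a model."""
    return {
        "name": "clean-then-train",  # hypothetical job name
        "tasks": [
            {
                "task_key": "clean_data",
                # Hypothetical notebook path; replace with your own.
                "notebook_task": {"notebook_path": "/pipelines/clean_data"},
            },
            {
                "task_key": "train_model",
                # This task starts only after clean_data succeeds.
                "depends_on": [{"task_key": "clean_data"}],
                "notebook_task": {"notebook_path": "/pipelines/train_model"},
            },
        ],
        # Email the team whenever a run of this job fails.
        "email_notifications": {"on_failure": [alert_email]},
    }


def run_order(spec: dict) -> list:
    """Derive an execution order from the depends_on edges (illustration only)."""
    graph = {
        task["task_key"]: {dep["task_key"] for dep in task.get("depends_on", [])}
        for task in spec["tasks"]
    }
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    job = build_job_spec("data-team@example.com")
    print(run_order(job))  # ['clean_data', 'train_model']
    print(json.dumps(job, indent=2))
```

`run_order` only illustrates what `depends_on` expresses; Databricks itself schedules `train_model` after `clean_data` finishes successfully.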

4. Improve Performance with Databricks Caching

- Use DataFrame Caching: If you reuse the same DataFrame in several operations, cache it so Spark does not re-read it from storage and recompute it every time. This speeds up repeated operations on that data.

- Selective Caching: Be strategic about which datasets to cache. Cached data takes up cluster memory, so focus on data that is accessed frequently or is expensive to compute.
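In PySpark, the calls themselves are simple: `df.cache()` (or `df.persist()`) to keep a DataFrame around and `df.unpersist()` to release it. The harder part is deciding what to cache. The sketch below is a hypothetical heuristic, not a Databricks feature: it estimates the time caching would save for each dataset and greedily picks the best savings per GB until an assumed memory budget is used up. All dataset names and numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class DatasetStats:
    name: str
    reads_per_job: int  # how many actions reuse this dataset
    load_seconds: float  # time to read and compute it once
    size_gb: float  # approximate in-memory size


def pick_datasets_to_cache(stats, memory_budget_gb):
    """Hypothetical greedy heuristic: cache what saves the most time per GB."""
    candidates = []
    for s in stats:
        # Caching only pays off from the second read onward.
        saved = (s.reads_per_job - 1) * s.load_seconds
        if saved > 0 and s.size_gb > 0:
            candidates.append((saved / s.size_gb, s))
    # Highest savings per GB first.
    candidates.sort(key=lambda pair: pair[0], reverse=True)

    chosen, used = [], 0.0
    for _, s in candidates:
        if used + s.size_gb <= memory_budget_gb:
            chosen.append(s.name)
            used += s.size_gb
    return chosen


if __name__ == "__main__":
    stats = [
        DatasetStats("customers", reads_per_job=6, load_seconds=40, size_gb=5),
        DatasetStats("raw_events", reads_per_job=1, load_seconds=300, size_gb=50),
        DatasetStats("daily_agg", reads_per_job=4, load_seconds=90, size_gb=2),
        DatasetStats("products", reads_per_job=3, load_seconds=10, size_gb=8),
    ]
    print(pick_datasets_to_cache(stats, memory_budget_gb=10))  # ['daily_agg', 'customers']
```

Note that `raw_events` is never chosen even though it is slow to load: it is read only once, so caching it would cost memory without saving any time.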

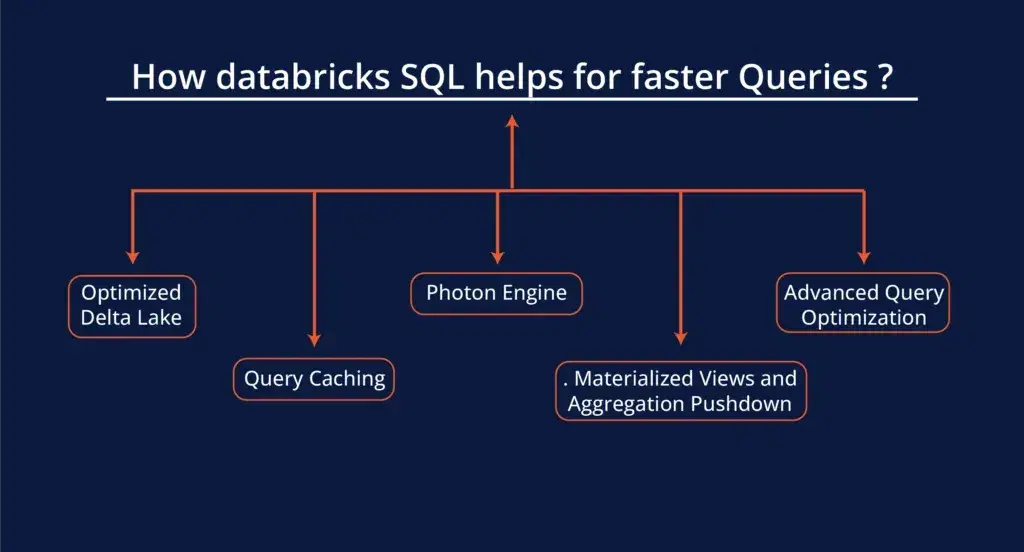

5. Use Databricks SQL for Faster Queries

- Optimize SQL Queries: Write efficient queries by filtering early and selecting only the columns you need rather than using SELECT *. Scanning less data not only improves query time but also reduces costs.

- Query Execution Plans: Review query execution plans in Databricks SQL, for example with the EXPLAIN statement, to understand how your data is processed. This helps identify where optimizations can be applied for better performance.
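Both habits, filtering and selecting only needed columns, then checking the plan, can be tried locally. To keep the sketch runnable without a Databricks SQL warehouse, it swaps in Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`, whose output format differs from Databricks' `EXPLAIN`. The `sales` table, its columns, and the `idx_sales_region` index are hypothetical.

```python
import sqlite3


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, amount REAL, notes TEXT)"
    )
    conn.executemany(
        "INSERT INTO sales (region, amount, notes) VALUES (?, ?, ?)",
        [("EU", 120.0, "a"), ("US", 80.0, "b"), ("EU", 30.0, "c"), ("APAC", 55.0, "d")],
    )
    conn.execute("CREATE INDEX idx_sales_region ON sales (region)")

    # Filter with WHERE and read only the columns needed, instead of SELECT *.
    query = "SELECT region, SUM(amount) FROM sales WHERE region = ? GROUP BY region"
    result = conn.execute(query, ("EU",)).fetchall()

    # Inspect the plan: the last column of each row describes one step.
    plan = [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + query, ("EU",))]
    conn.close()
    return result, plan


if __name__ == "__main__":
    result, plan = demo()
    print(result)  # [('EU', 150.0)]
    for step in plan:
        print(step)
```

The index is specific to SQLite; the transferable habit is reading the plan to confirm that the engine only scans the data your filter actually needs.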