Introduction:

Which Is Better for Your Data: Databricks or Snowflake?

- Databricks: Best suited for data engineering, machine learning, and AI-related projects. Its strengths are real-time data analytics and large-scale (big data) processing.

- Snowflake: Primarily used for data warehousing. It excels at storing and querying structured data but lacks advanced machine learning capabilities like those in Databricks.

Why Is Databricks Important for Business Success?

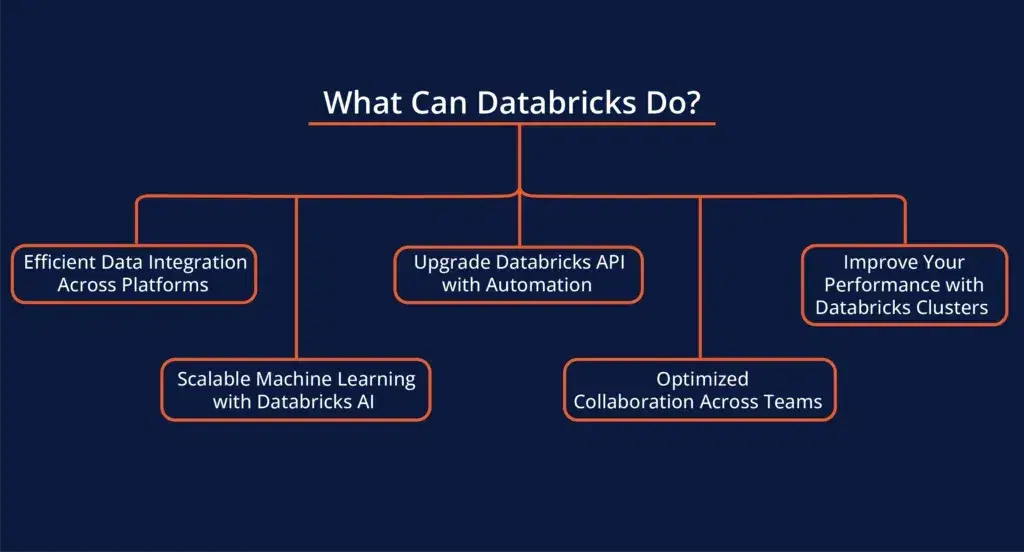

What Can Databricks Do? Explore Its 5 Key Features

1. Efficient Data Integration Across Platforms

- Cross-Cloud Flexibility: Because Databricks runs on both Azure and AWS, you can move workloads between them while keeping a consistent platform. This makes it easier to scale and manage your data no matter where it's stored.

- Unified Analytics: With a single platform for data engineering and analytics, your teams can collaborate more easily, which reduces delays and confusion in data workflows.

2. Scalable Machine Learning with Databricks AI

- Pre-Built Models: Get started with machine learning using Databricks AI's library of pre-built models, so you don't have to build every model from scratch.

- Custom ML Models: Advanced users can build and deploy custom models at scale. Together with the pre-built models, this makes machine learning accessible to organizations at every skill level, not just teams of specialists.

3. Automate Workflows with the Databricks API

- Automation of Repetitive Tasks: Use the Databricks API to schedule recurring tasks such as data refreshes, cluster management, or model retraining, so nobody has to run them by hand.

- Integration with CI/CD Pipelines: You can easily call the Databricks API from your CI/CD pipelines, which helps keep updates and deployments of your data projects consistent and repeatable.
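As a rough illustration of the automation and CI/CD points above, the sketch below uses only Python's standard library to trigger an existing Databricks job through the Jobs API (`POST /api/2.1/jobs/run-now`). It assumes the workspace URL and a personal access token are supplied in the `DATABRICKS_HOST` and `DATABRICKS_TOKEN` environment variables, and the job ID is a placeholder. `build_run_now_request` and `trigger_job` are hypothetical helper names, not part of any Databricks library.

```python
import json
import os
import urllib.request


def build_run_now_request(host: str, token: str, job_id: int) -> urllib.request.Request:
    """Build (but do not send) a Jobs API run-now request for an existing job.

    Hypothetical helper: host, token, and job_id are supplied by the caller.
    """
    url = f"{host.rstrip('/')}/api/2.1/jobs/run-now"
    body = json.dumps({"job_id": job_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # Personal access tokens are sent as a Bearer token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def trigger_job(job_id: int) -> dict:
    """Send the request; assumes DATABRICKS_HOST and DATABRICKS_TOKEN are set."""
    req = build_run_now_request(
        os.environ["DATABRICKS_HOST"], os.environ["DATABRICKS_TOKEN"], job_id
    )
    with urllib.request.urlopen(req) as resp:
        # The JSON response identifies the newly started run (e.g. its run_id).
        return json.load(resp)
```

Building the request separately from sending it keeps the helper testable without network access. In a CI/CD pipeline, a deployment step could call `trigger_job` after new code is merged; the official Databricks CLI and SDKs offer higher-level alternatives to hand-built HTTP requests.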

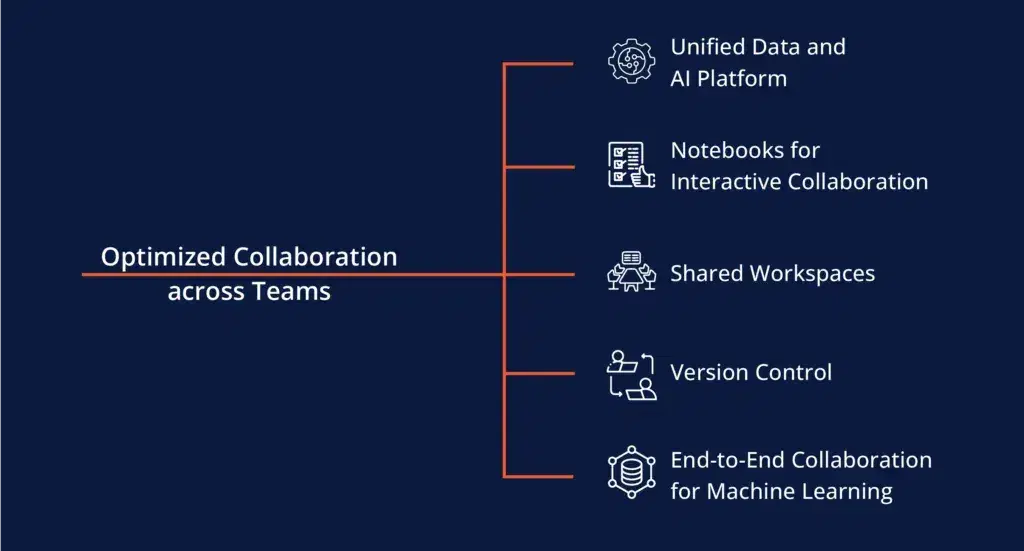

4. Optimized Collaboration Across Teams

- Shared Workspaces: Everyone can work on the same data, code, and models in one environment, which makes it easier to share information and results.

- Version Control: Databricks' version control features let teams track changes and collaborate more efficiently, and they help prevent people from overwriting each other's work.

5. Improve Your Performance with Databricks Clusters

- Cost-Effective: You pay only for the compute you use, and clusters can be configured to shut down automatically after a period of inactivity, so you're not wasting money on idle clusters.

- Real-Time Adjustments: With autoscaling enabled, Databricks automatically adds or removes worker nodes as your workload increases or decreases, keeping performance steady.
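To make the cost and autoscaling points concrete, here is a minimal sketch that builds a cluster definition in the shape accepted by the Clusters API (`POST /api/2.0/clusters/create`). The `autoscale` range lets Databricks add or remove workers as load changes, and `autotermination_minutes` shuts the cluster down after it has been idle. The helper name `autoscaling_cluster_spec`, the cluster name, and the default `spark_version` and `node_type_id` values are illustrative placeholders; check which runtime versions and instance types are available in your own workspace.

```python
import json


def autoscaling_cluster_spec(
    name: str,
    min_workers: int,
    max_workers: int,
    idle_minutes: int = 30,
    spark_version: str = "15.4.x-scala2.12",  # placeholder runtime version
    node_type_id: str = "i3.xlarge",  # placeholder AWS instance type
) -> dict:
    """Hypothetical helper returning a clusters/create request body."""
    # Basic local sanity check only; the service applies its own validation.
    if min_workers < 0 or min_workers > max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Databricks adds or removes workers within this range as load changes.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Terminate the cluster after this many idle minutes to avoid paying for idle compute.
        "autotermination_minutes": idle_minutes,
    }


if __name__ == "__main__":
    spec = autoscaling_cluster_spec("nightly-etl", min_workers=2, max_workers=8)
    print(json.dumps(spec, indent=2))
```

The resulting dictionary can be sent as the JSON body of a clusters/create request. A narrow worker range keeps costs predictable, while a wider range gives more headroom for bursty workloads.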