Upon requesting completion from OpenAI, the entire response is generated and delivered in a single payload by default. However, in today's fast-paced US market where milliseconds matter, this approach can create significant delays—sometimes taking 10-15 seconds for longer completions, which simply doesn't cut it for modern American businesses.

The game-changer? Streaming completions as they're generated in real-time. This breakthrough approach delivers responses instantly, allowing you to process and display content as it flows—a critical advantage that's reshaping how US companies interact with AI.

This is particularly useful for applications requiring immediate feedback from the model, for example interactive chatbots, live coding assistants or real-time content generation tools.

Why Choose OpenAI Chat?

OpenAI Chat stands as the gold standard in conversational AI, trusted by Fortune 500 companies and millions of users worldwide. With its cutting-edge GPT technology and unmatched natural language understanding, it delivers human-like interactions that drive real business results. In today's competitive market, OpenAI Chat isn't just a tool—it's your strategic advantage for superior customer engagement and operational efficiency.

Key Benefits

- Lightning-Fast Responses - Get instant, accurate answers with industry-leading response times that keep customers engaged

- Advanced Context Understanding - Handles complex conversations with remarkable accuracy, remembering context throughout entire interactions

- Enterprise-Grade Security - Bank-level encryption and compliance standards protect your sensitive business data and customer information

- Seamless Integration - Plug-and-play API integration with existing systems, reducing deployment time from months to days

What is Streaming?

Streaming is a method used in computing where data is sent in a continuous flow, allowing it to be processed in a steady and continuous stream. Unlike the traditional download and execute model where the entire package of data must be fully received before any processing can start, streaming enables the data to start being processed as soon as enough of it has been received to begin operations.

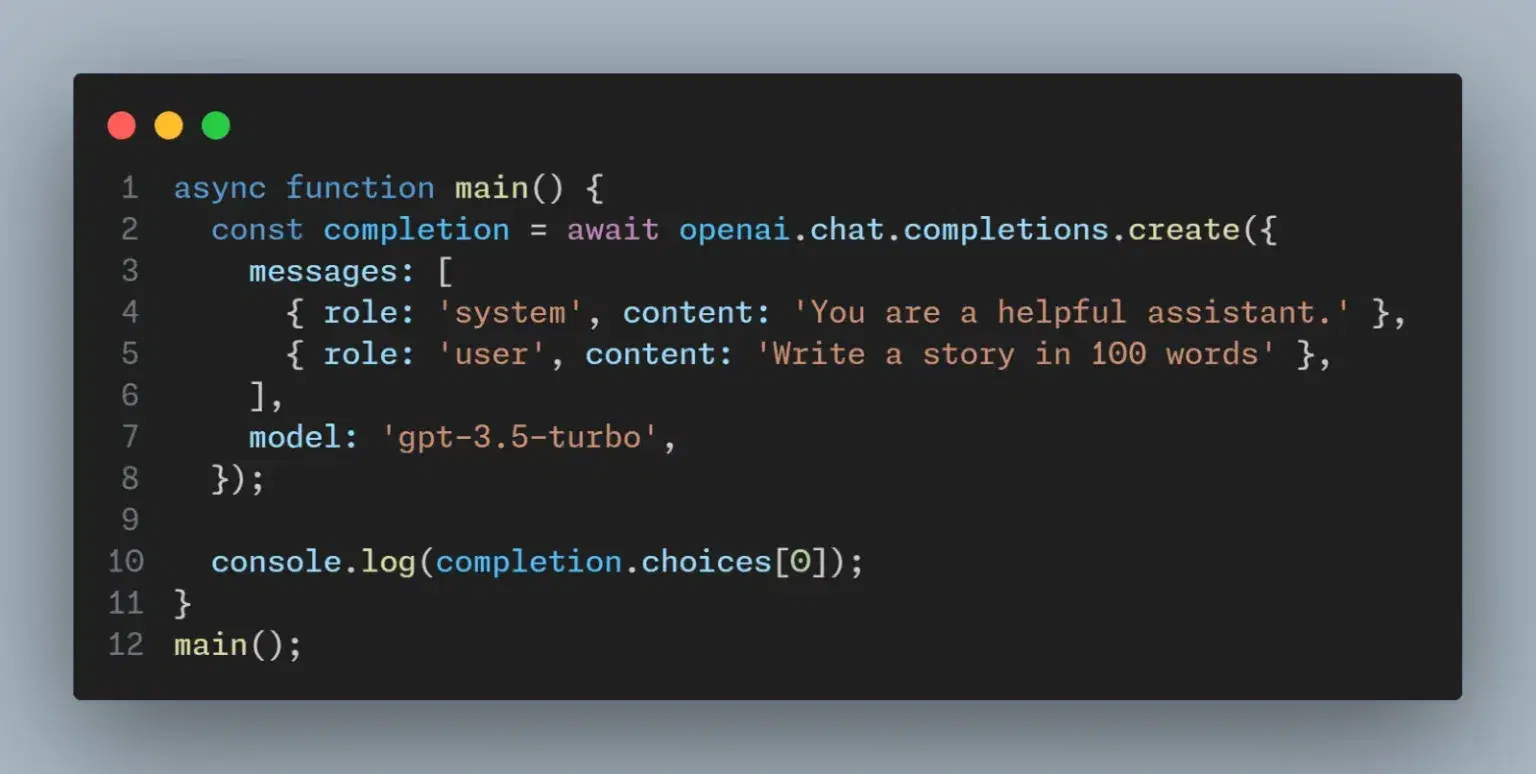

Example of Standard Chat Completion Response

The completion response is computed and after that it is returned.

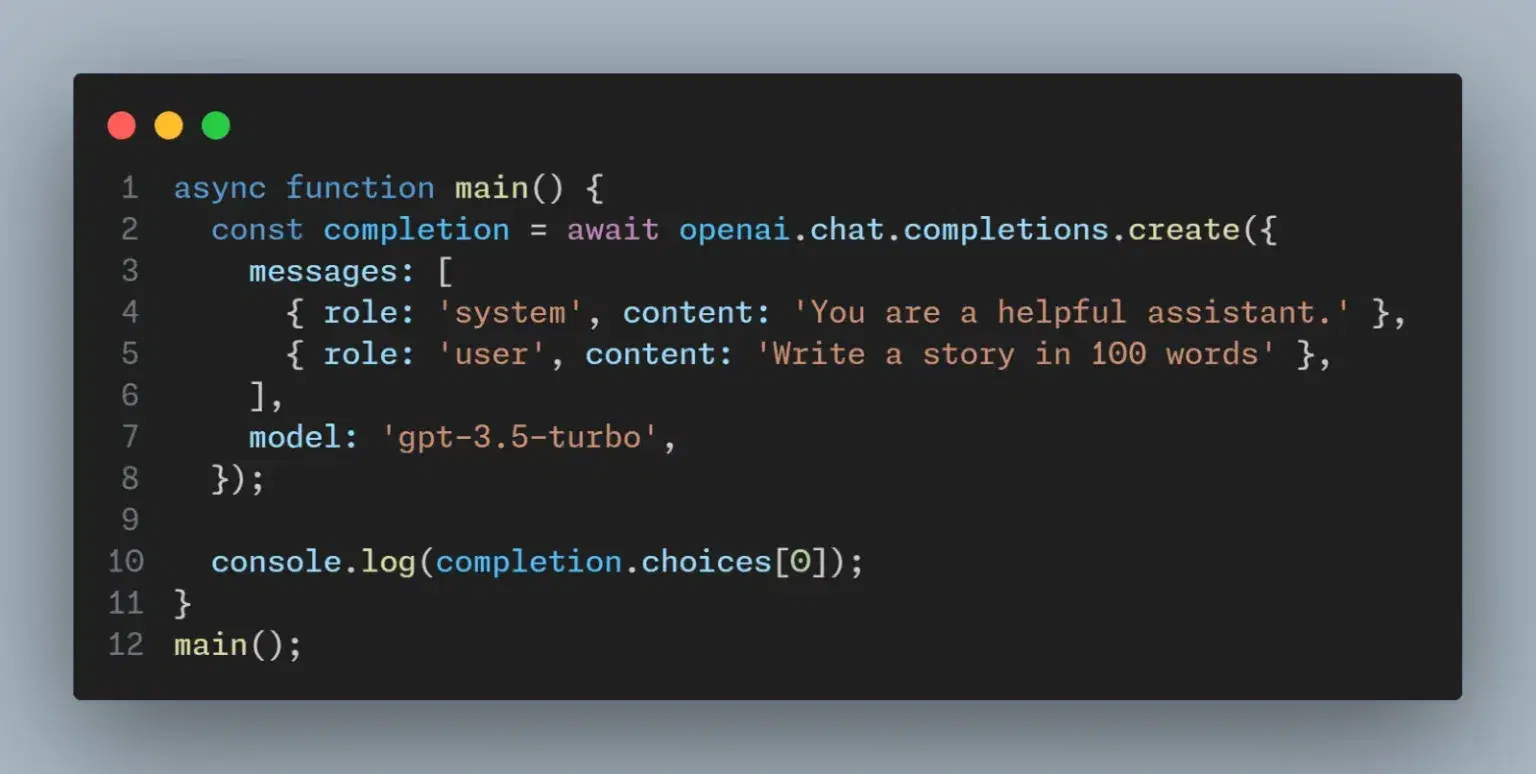

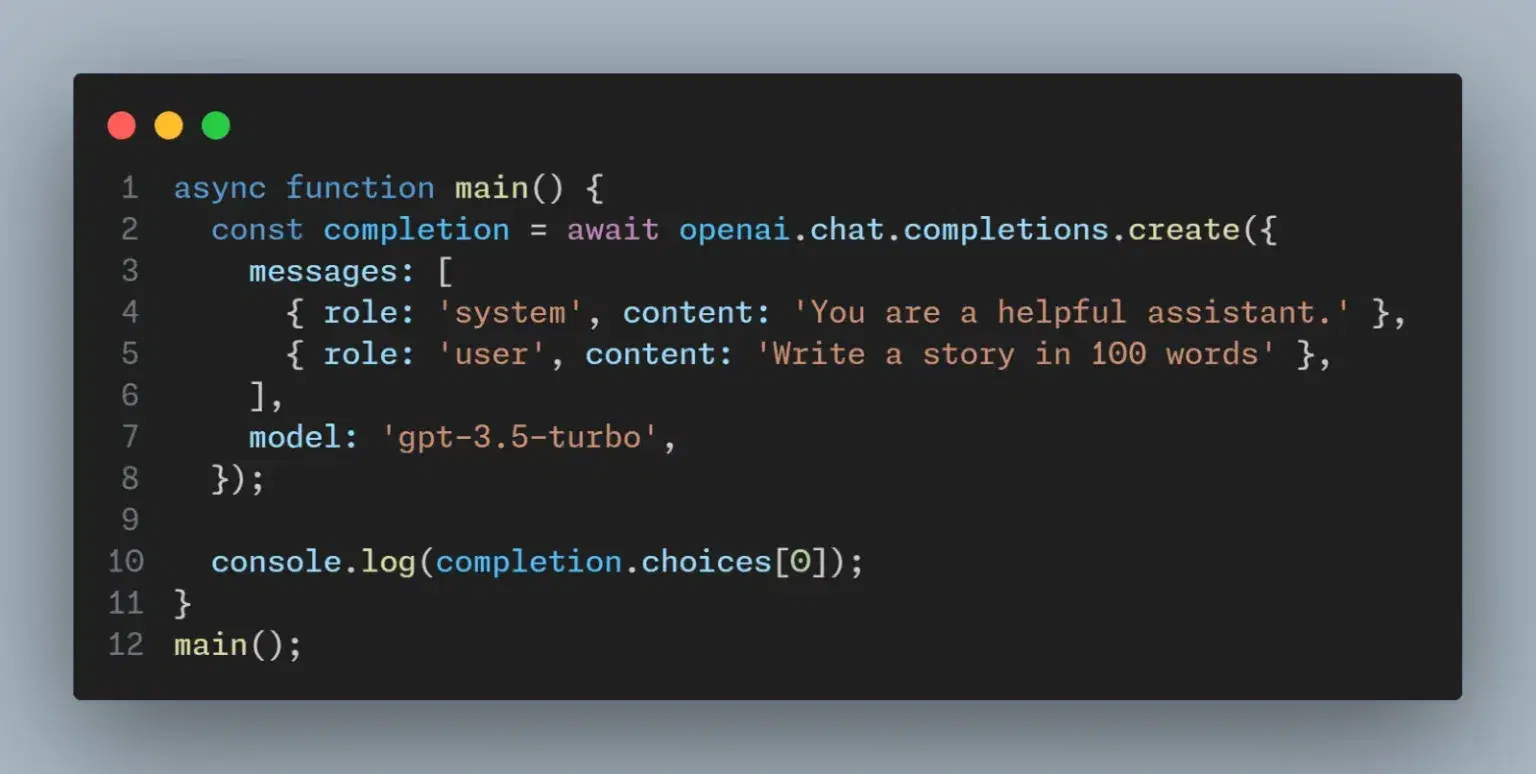

How to Stream a Chat Completion

To enable streaming with OpenAI's API, set the stream key to true in your API request. Here’s an example using JavaScript:

You can now process the incoming data incrementally:

This loop listens for data chunks sent by the OpenAI model. It checks if the model has finished generating content and then writes each received chunk to the response. This method ensures that the frontend can begin processing data without waiting for the entire content to be generated.

What is Server-Sent Events (SSE)

Server-Sent Events (SSE) are a standard allowing servers to push information to web clients. Unlike WebSocket, SSE is designed specifically for one-way communications from the server to the client. This makes SSE ideal for applications like live updates from social feeds, news broadcasting, or as in this case, streaming AI-generated text.

SSE works over standard HTTP and is straightforward to implement in modern web applications. Events streamed from the server are text-based and encoded in UTF-8, making them highly compatible across different platforms and easy to handle in client-side JavaScript.

Consuming Streamed Data on the Frontend

To manage streamed data on the frontend, you can use the Fetch API to make a request to the server endpoint that initiates the stream. Below is an example:

To handle streamed data on the frontend, use the Fetch API to connect to server endpoints that initiate real-time streams. In 2025's performance-driven landscape, the trend is shifting toward servers that ship less JavaScript to browsers. This makes streaming absolutely critical for modern web applications. This allows incremental data consumption as packets arrive. Apache Kafka, Flink, and Iceberg are moving from niche tools to "fundamental parts of modern data architecture”. The essential real-time streaming has become a competitive advantage.

Key Implementation Strategies:

- Enhanced Performance Architecture - Utilize TextDecoderStream for optimal UTF-8 decoding. This ensures seamless data transformation as streams flow in real-time without blocking the main thread

- Hybrid Rendering Approach - The 2025 consensus favors hybrid approaches between SSR and CSR. So, combining fast initial loads with dynamic streaming updates for superior user experience

- AI-Ready Pipeline Integration - Implement streaming architectures that support instant personalization and AI-driven content delivery. This can be important for modern customer engagement strategies

- Cross-Platform Scalability - Headless architecture enables faster content delivery, improved agility, and easier cross-platform deployment. With this you can make your solution streamline and future-proof across all different devices.

How to enable streaming in OpenAI chat API?

To enable streaming in the OpenAI Chat Completions API, simply set the "stream": true flag in your API request payload. This instructs the GPT model to return tokens incrementally as they're generated, instead of waiting for full completion. It’s a game-changer for real-time applications such as AI chatbot implementation, live content generators, and real-time AI assistants.

By using streaming mode, developers can significantly reduce latency and deliver instant AI responses, resulting in low-latency chat applications that feel fast, fluid, and human-like. This approach is especially powerful for building AI-powered user experiences that require responsiveness and contextual relevance, whether in e-commerce, tech support, or customer service chatbot performance environments.

What is SSE in OpenAI streaming?

SSE, or Server-Sent Events, is a browser-native technology used to stream AI responses from the server to the client over standard HTTP. In the context of OpenAI streaming, SSE provides a lightweight, real-time data delivery mechanism, perfect for sending incremental GPT-4 completions. Unlike WebSockets, SSE is unidirectional (server to client), which makes it ideal for scalable enterprise AI integration scenarios such as interactive assistants, monitoring dashboards, or notification services.

Why use Server-Sent Events with GPT?

Using SSE with GPT unlocks truly real-time AI experiences. Since GPT-based models like GPT-4 can generate long and complex outputs, SSE enables those responses to be delivered incrementally, improving perceived speed and boosting user engagement. This not only elevates customer satisfaction but also aligns with modern web trends—such as AI-driven personalization and hybrid rendering techniques.

The best part? SSE is simple to implement using the Fetch API, making it a developer-friendly option for businesses building scalable AI solutions that demand speed and clarity.

Final Summary

By using SSE, developers can create more engaging user experiences, with AI responses delivered in real-time. Whether you are building a chatbot, a live commentary tool or any other application that benefits from immediate textual output, streaming AI completions is a powerful feature to include in your development toolkit. Are you curious to know more about AI tools and technologies? Our next blog from this series will provide you with interesting and useful information on integrating large language models with external tools. Click here to improve your tech knowledge.